NeuroSync — An Immersive Human–AI Vision Experience

A conceptual experience that dissolves the boundaries between human perception, algorithms, and AI by integrating digital sensations directly into your sight.

See It in Action

Watch how the prototype works in real time.

Seamless Human-Machine Symbiosis through Vision

NeuroSync imagines a future where human perception and machine reasoning are no longer separate processes. Instead, they are rendered as a single, unified experience. Through real-time visual overlays, the system augments your field of vision not by adding clutter, but by subtly merging cognitive outputs from both human and machine.

By using your gaze as the sole input, NeuroSync creates a responsive visual interface that does not demand your attention; it follows it. This project explores what it means for technology to become not just supportive, but truly symbiotic.

Concept overview

Core Design Questions

How can machine reasoning occur in sync with human perception?

What shifts when attention, decision-making, and creativity are co-authored by machines?

What principles guide interface invisibility, so that information is displayed without overwhelming the user?

When both the brain and the machine are thinking simultaneously, where do we draw the line of control?

Technical Landscape

Augmented and mixed reality systems have evolved rapidly, but most applications remain display-driven: they show maps, messages, or menus in our view. While useful, these interfaces often treat the human as a passive receiver.

Our era is marked by the transition from tool use to system integration. We are moving from separation between humans and machines toward symbiosis. This shift challenges the long-standing metaphor of technology as something external to us.

Personal Motivation

My interest in cognitive augmentation began with research into brain-computer interfaces and AR design. I noticed a recurring pattern: machines enhanced us, but rarely merged with us. NeuroSync is my attempt to step away from the “tool” mindset and instead explore what it means to design with an intelligent partner.

Design Overview

Interaction Principles

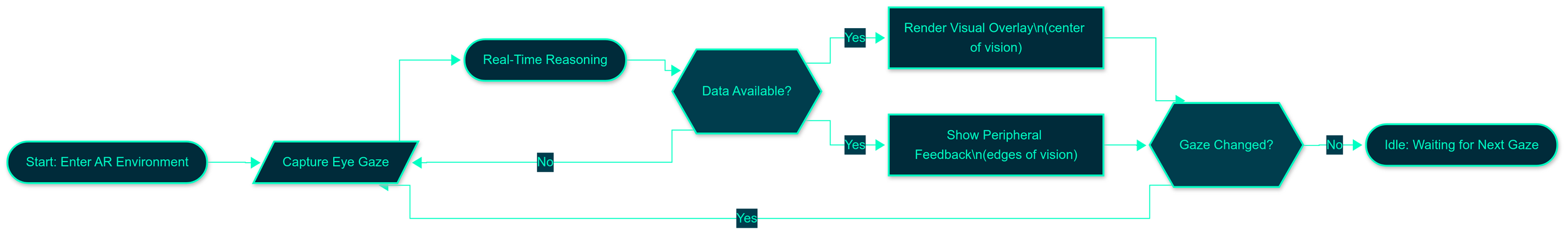

Gaze Detection: The user looks at an object; the system immediately recognizes the focal point.

Real-Time Reasoning: The system pulls relevant data and computes contextual insights.

Visual Overlay: The insights appear in the center of vision, no matter where the user is looking.

Peripheral Feedback: Algorithm confidence and data credibility float subtly around the edges of the view.
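The four principles above form a single loop from gaze to overlay. The Python sketch here strings them together under stated assumptions: gaze arrives as normalized coordinates, scene objects come with bounding boxes from some upstream recognizer, and the "reasoning" step is a dictionary lookup standing in for a real model. All class and function names (`GazeSample`, `SceneObject`, `detect_focus`, `reason_about`, `render`, `step`) and the example data are hypothetical; the project does not describe its implementation.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class GazeSample:
    x: float  # normalized horizontal gaze position: 0.0 = left edge, 1.0 = right edge
    y: float  # normalized vertical gaze position: 0.0 = top edge, 1.0 = bottom edge


@dataclass
class SceneObject:
    label: str
    # Hypothetical bounding box, in the same normalized coordinates as GazeSample.
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, gaze: GazeSample) -> bool:
        return self.x0 <= gaze.x <= self.x1 and self.y0 <= gaze.y <= self.y1


@dataclass
class Insight:
    text: str
    confidence: float   # hypothetical algorithm confidence, 0.0 to 1.0
    credibility: float  # hypothetical data-source credibility, 0.0 to 1.0


@dataclass
class OverlayFrame:
    center_text: str             # meant to be drawn at the center of vision
    edge_cues: Dict[str, float]  # values for the subtle peripheral indicators


# Hypothetical knowledge base: label -> (insight text, confidence, credibility).
Knowledge = Dict[str, Tuple[str, float, float]]


def detect_focus(gaze: GazeSample, objects: List[SceneObject]) -> Optional[SceneObject]:
    """Gaze Detection: return the first object under the gaze point, if any."""
    for obj in objects:
        if obj.contains(gaze):
            return obj
    return None


def reason_about(obj: SceneObject, knowledge: Knowledge) -> Insight:
    """Real-Time Reasoning: stand-in for a model call, here a dictionary lookup."""
    text, confidence, credibility = knowledge.get(obj.label, ("No data", 0.0, 0.0))
    return Insight(text, confidence, credibility)


def render(insight: Insight) -> OverlayFrame:
    """Visual Overlay + Peripheral Feedback: centered text, trust scores for the edges."""
    return OverlayFrame(
        center_text=insight.text,
        edge_cues={"confidence": insight.confidence, "credibility": insight.credibility},
    )


def step(gaze: GazeSample, objects: List[SceneObject],
         knowledge: Knowledge) -> Optional[OverlayFrame]:
    """One pass of the loop: a gaze sample in, an overlay frame out (None if nothing is in focus)."""
    obj = detect_focus(gaze, objects)
    if obj is None:
        return None
    return render(reason_about(obj, knowledge))


if __name__ == "__main__":
    scene = [SceneObject("coffee cup", 0.4, 0.4, 0.6, 0.7)]
    facts = {"coffee cup": ("Ceramic mug, about 350 ml", 0.9, 0.8)}
    print(step(GazeSample(0.5, 0.5), scene, facts))
```

`OverlayFrame` deliberately carries no screen position: following the Visual Overlay principle, the insight always appears at the center of vision, so where the user looks decides only *what* is shown, not *where*.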

Item recognition flow chart

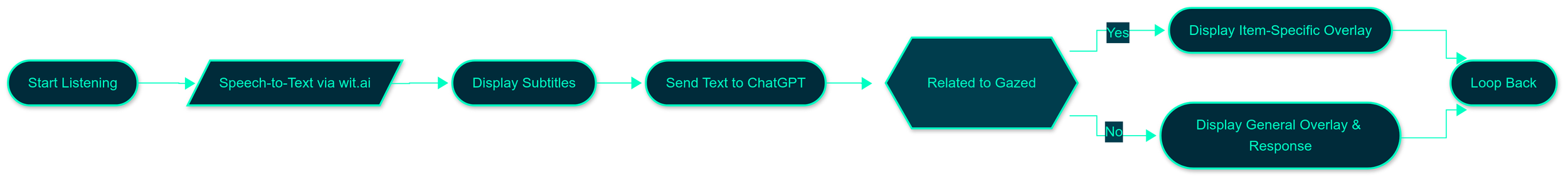

Chat integration flow chart

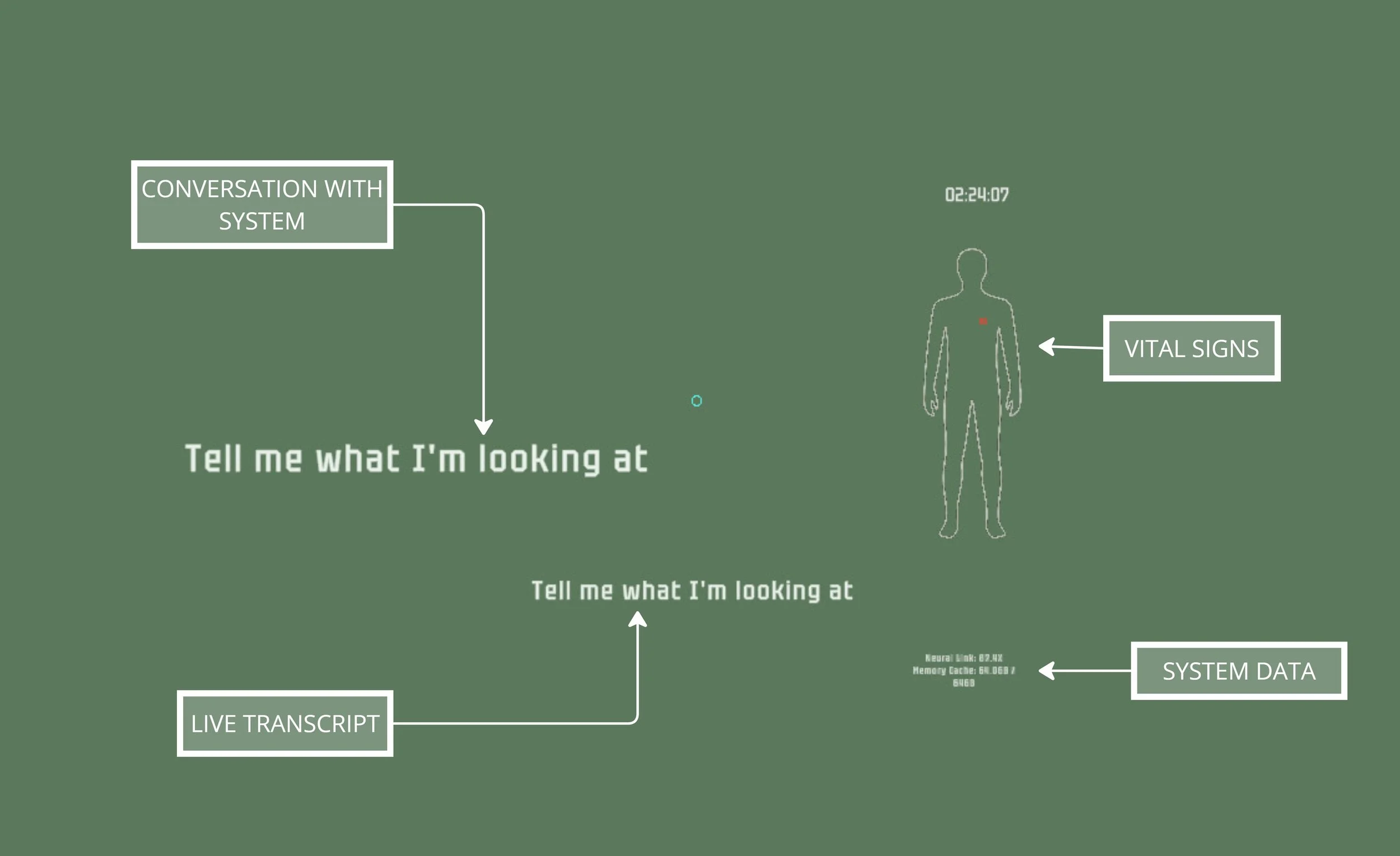

Interface Explained

NO TOUCH. NO GESTURES. NO MENUS.

The system is purely driven by gaze and internal logic. It’s designed to feel like a co-pilot, not a dashboard.
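Because gaze is the only input, the system has to decide when a passing glance becomes a deliberate look. One common technique, not described in the original project, is to group raw eye-tracker samples into fixations with the dispersion-threshold (I-DT) algorithm and then treat a fixation that lasts past a dwell threshold as intent. This sketch assumes timestamped, normalized gaze samples; the names `detect_fixations` and `intended_targets` and the threshold values (`max_dispersion`, `min_duration`, `dwell_time`) are hypothetical placeholders, not NeuroSync's actual parameters.

```python
from dataclasses import dataclass
from typing import List, Tuple

# One eye-tracker reading: (timestamp in seconds, normalized x, normalized y).
Sample = Tuple[float, float, float]


@dataclass
class Fixation:
    start: float  # timestamp of the first sample in the fixation
    end: float    # timestamp of the last sample in the fixation
    x: float      # centroid of the clustered gaze points
    y: float


def dispersion(window: List[Sample]) -> float:
    """I-DT dispersion: horizontal spread plus vertical spread of the window."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: List[Sample],
                     max_dispersion: float = 0.02,
                     min_duration: float = 0.1) -> List[Fixation]:
    """Dispersion-threshold (I-DT) fixation detection over time-ordered samples."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the initial window until it spans at least min_duration seconds.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # too little data left to form a fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Keep extending the window while the points stay tightly clustered.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(
                start=window[0][0],
                end=window[-1][0],
                x=sum(s[1] for s in window) / len(window),
                y=sum(s[2] for s in window) / len(window),
            ))
            i = j + 1  # continue right after the fixation
        else:
            i += 1  # drop the first sample (saccade or noise) and retry
    return fixations


def intended_targets(fixations: List[Fixation], dwell_time: float = 0.5) -> List[Fixation]:
    """Hypothetical dwell rule: only sufficiently long fixations count as intent."""
    return [f for f in fixations if f.end - f.start >= dwell_time]
```

The dwell threshold is the main design lever here: too short and every glance triggers reasoning (the "Midas touch" problem of gaze interfaces), too long and the co-pilot feels sluggish.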